If a picture paints 1,000 words, I am going to endeavour to save a few thousand words.

I am going to assume that you are somewhat familiar with the difference between relational and dimensional models. In case you're not, let me briefly summarize:

Relational models usually deal with OLTP applications and are normalized, thus avoiding redundancy and allowing for reuse, good application data model design, conformity and fast retrieval of small amounts of data, which is typical of online transactional processing applications (OLTP), like CRM, or airline reservations, etc.

In the world of Data Warehousing or its predecessor (DSS: Decision Support Systems), we're typically dealing with less granularity and aggregated data. Having a dimensional model allows us to summarize a fact (sale, order, web visits) by a dimension (month, demographic / other attribute (example: number of orders by sales method (online, direct-mail, store, direct-market ([pyramid], etc.)

Here we see a flattened structure (ORDERS) which is the FACT table. The fact is that an order was taken. The dimensions (attributes) of the order are:

- By whom?

- When?

- Where?

- For whom?

- For how much?

- Delivery details

In a previous post, we discussed conformed dimensions, and one of the typical conformed dimensions is the time dimension, since every entity that uses time will be using the same instance of time. Thus when an order was taken on a given day (say 20th June), it is the same 20th June that we had a staff turnover event, that our IVR received a phone call, that we received some goods into inventory, that we got a return from a previous order, that we had some customer service activity. Since all these facts use date and the date referred to will be the same, we can reasonable safely assume that the Date Dimension will be conformed to the same values between all these facets of the business. Great, now that conformed dimensions is no longer a mystery, let's move on.

However, when we look at a date (I am going to ignore the time of day for this example, but that can come into it too, just think about it, like "before lunch / tea", etc.), as we aggregate and rollup, we can see that the Date Dimension is actually part of a hierarchy of attributes. For instance, any given day falls within a given month, which falls within a given quarter. Quarters are differentiated from each other by the years they refer to (example: Q1 of 2008 is different to Q1 of 2009, etc.).

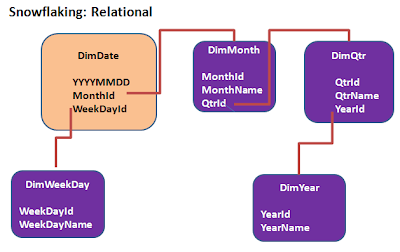

When we start to relate each and every part of the dimensional attributes to new dimensions, we refer to this as a snowflake schema, and it st

arts to resemble a relational model again. Be careful!

arts to resemble a relational model again. Be careful!While there is inherently nothing wrong with this, it does beg the question: why?

The only advantage clearly visible is that the redundancy is removed, thus to describe the quarter, there will only be 1 record with a value for quarter. However, keep in mind that in a data warehouse, we are not interested in normalization or eliminating data redundancies, which means it is OK to break the first law of normalization (1NF [1st Normal Form] states no repeating groups).

The design above represents some performance challenges which will bite you in the rear when dealing with large volumes of data, even medium-sized data volumes. You are creating more joins, more chance of something going wrong, more work for the optimizer, more paths and statistics to consider, back to all the reasons why large-scale aggregation in an OLTP environment is a bad idea. So what to do? What to do?

I'm glad you asked that question, I may just have an answer. By collapsing the upper levels of the dimensional hierarchy into the lowest level of granularity (YYYYMMDD), we will repeat the values for quarter name, year, etc., however we have 1 single table to go to thus dramatically improving performance.

This structure yields massive performance gains, especially true if you are employing a vertical / column-based DW architecture.

If there are 90 days in a quarter and 365 days in a year, instead of the key to the year or quarter dimension, you now have the values repeating inside a single table, at the lowest level of granularity.

I have seen relational modelers struggle with this concept and understandably so, especially if they have had decades of ingrained normalization as part of database design.

With a data warehouse, the generally accepted rules of OLTP don't really apply ( at least they SHOULD not, if designed properly) , so hopefully this article has illustrated in a down-to-earth manner what is a collapsed dimension, whether conformed or not.

Just for recap, it is when the upper node values of the hierarchy are represented (redundantly so) at the lowest level of granularity. This also allows for very easy drill-down and querying.

Have an awesome day.